We work in sprints of about two weeks, and we packed as many improvements into this one as we could!

We're thrilled to announce the release of Vext v1.6, a significant leap forward in enabling developers and businesses to max out the potential of LLMs with greater ease, efficiency, and control.

Enhanced Endpoint Capabilities

Long Polling Support

One of the standout features in this update is the introduction of long polling for endpoints. If you run long or complex LLM pipelines, you have probably run into request timeouts.

With long polling, you submit a request and retrieve the result later instead of holding the connection open until processing finishes, so your request gets the time it needs to complete. This makes it much easier to build reliable, robust LLM-powered applications on top of long-running pipelines.

How it works

1. Include a new parameter, long_polling, with a boolean value in your request body alongside the existing "payload".
2. Upon submission, the system responds with a unique request_id.
3. To check the status of your request, call the same endpoint again, this time providing only the request_id you received.
4. The system returns the current progress of your request.
5. Repeat the status check as necessary until you receive the final generated response.
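A minimal client-side sketch of these steps in Python. The request-body keys payload, long_polling, and request_id come from the steps above; everything else is an assumption for illustration: the post transport (where you would wrap your HTTP client, endpoint URL, and API key), the hypothetical "status" field with the value "done" that marks a finished response, and the polling interval. Check the API reference for the actual response shape.

```python
import time
from typing import Any, Callable, Dict

# A transport is any callable that sends a JSON body to the pipeline endpoint
# and returns the decoded JSON response. Injecting it keeps this sketch
# independent of any particular HTTP library (and lets it run offline).
Transport = Callable[[Dict[str, Any]], Dict[str, Any]]


def run_with_long_polling(
    post: Transport,
    payload: str,
    interval_s: float = 2.0,
    max_attempts: int = 60,
) -> Dict[str, Any]:
    """Submit a request with long_polling enabled, then poll by request_id.

    Assumption: a finished response is recognised by a hypothetical
    "status" field equal to "done".
    """
    # Steps 1-2: submit the payload with the flag set and keep the request_id.
    first = post({"payload": payload, "long_polling": True})
    request_id = first["request_id"]

    for _ in range(max_attempts):
        # Steps 3-4: call the same endpoint again with only the request_id.
        status = post({"request_id": request_id})
        if status.get("status") == "done":  # hypothetical completion marker
            return status
        time.sleep(interval_s)  # Step 5: wait, then check again
    raise TimeoutError(f"request {request_id} did not finish in time")


def make_fake_endpoint(steps_until_done: int) -> Transport:
    """Hypothetical stand-in for the real endpoint, for offline testing."""
    calls = {"n": 0}

    def post(body: Dict[str, Any]) -> Dict[str, Any]:
        if "payload" in body:
            return {"request_id": "req-123"}
        calls["n"] += 1
        if calls["n"] >= steps_until_done:
            return {"status": "done", "text": "final answer"}
        return {"status": "processing"}

    return post


result = run_with_long_polling(make_fake_endpoint(3), "Hello", interval_s=0)
print(result["text"])  # final answer
```

A fixed interval keeps the sketch simple; for pipelines that routinely run for minutes, a longer interval (or one that grows between checks) reduces the number of status calls.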

You can learn more in our help center article or in the API reference.

More Information Per API Call

The LLM pipeline endpoint now returns more data with each API call. This includes citations, file paths (with optional public access toggling), and, if you use retrieval-augmented generation (RAG), the referenced chunks. These additions make it easier to build more nuanced, credible, and feature-rich LLM applications.
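As an illustration of how this extra data might be used, here is a Python sketch that turns citations into numbered source notes shown under an answer. The response shape is an assumption: the keys "citations", "file_path", and "chunk" are hypothetical names chosen to mirror the data described above, so map them to the real field names in the API reference.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Optional


@dataclass
class Citation:
    # Hypothetical fields: the source file path (may be absent) and the
    # chunk of text the answer referred to.
    file_path: Optional[str]
    chunk: str


def extract_citations(response: Dict[str, Any]) -> List[Citation]:
    """Pull citations out of a pipeline response (assumed shape)."""
    return [
        Citation(file_path=item.get("file_path"), chunk=item.get("chunk", ""))
        for item in response.get("citations", [])
    ]


def render_sources(citations: List[Citation]) -> str:
    # Number each source so it can be displayed as a footnote.
    lines = []
    for i, c in enumerate(citations, start=1):
        where = c.file_path or "(path not provided)"
        lines.append(f"[{i}] {where}: {c.chunk}")
    return "\n".join(lines)


response = {
    "text": "You can export logs as .csv.",
    "citations": [
        {"file_path": "docs/logging.pdf", "chunk": "Logs can be exported as .xlsx or .csv."},
        {"file_path": None, "chunk": "Long polling returns a request_id."},
    ],
}
print(render_sources(extract_citations(response)))
```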

Check out the API reference to learn more.

Enhanced Logging for Unmatched Transparency

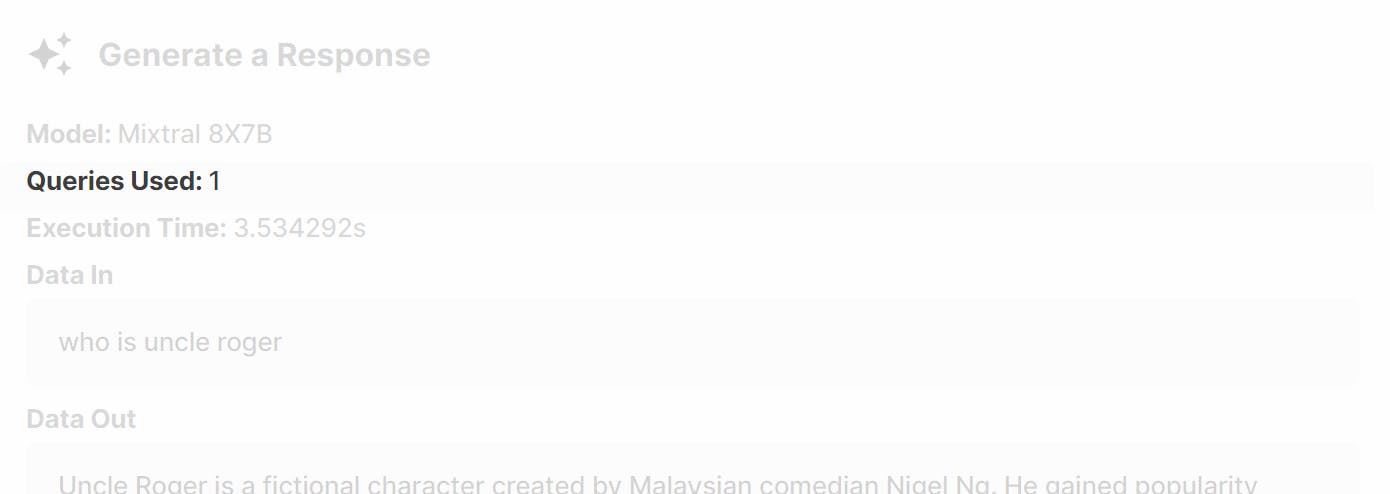

Query Consumption Visibility

For projects involving multiple LLMs or smart functions within an LLM pipeline, understanding resource utilization is crucial. Detailed logging now shows how many queries each run consumed, giving you clear insight into your project's operational footprint.

Execution Time and Source Tracking

The new logs also show the execution time of each pipeline step and the sources used for RAG, including citations. This makes it easier to find performance bottlenecks and to verify where generated content came from.
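Once you have per-step timings, a small script can point you at the step worth optimizing first. This Python sketch ranks steps by mean execution time across runs; the record keys "step" and "duration_ms" are hypothetical and stand in for whatever the actual log fields are called.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List, Tuple


def slowest_steps(records: List[Dict], top: int = 3) -> List[Tuple[str, float]]:
    """Rank pipeline steps by mean execution time, slowest first.

    Assumption: each record has hypothetical keys "step" (step name)
    and "duration_ms" (execution time in milliseconds).
    """
    durations: Dict[str, List[float]] = defaultdict(list)
    for r in records:
        durations[r["step"]].append(float(r["duration_ms"]))
    ranked = sorted(
        ((step, mean(times)) for step, times in durations.items()),
        key=lambda pair: pair[1],
        reverse=True,
    )
    return ranked[:top]


logs = [
    {"step": "retrieve", "duration_ms": 420},
    {"step": "generate", "duration_ms": 1800},
    {"step": "retrieve", "duration_ms": 380},
    {"step": "classify", "duration_ms": 150},
    {"step": "generate", "duration_ms": 2200},
]
print(slowest_steps(logs, top=2))  # [('generate', 2000.0), ('retrieve', 400.0)]
```

The mean smooths out one-off slow runs; if you care about worst-case latency instead, swap mean for max.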

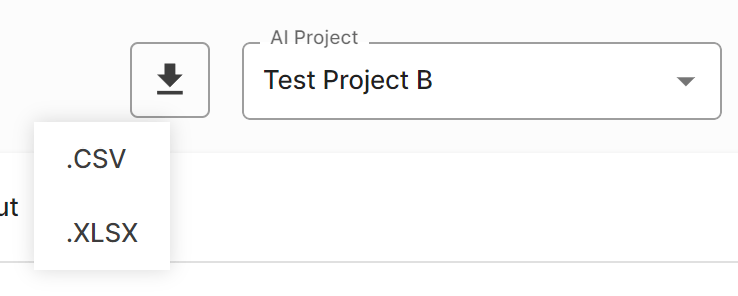

Log Export Options

To support flexible data analysis, Vext 1.6 lets you download logs in both .xlsx and .csv formats, so you can work with them in whichever tool you prefer.
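For example, a .csv export makes it straightforward to total query consumption per run with Python's standard csv module. The column names "run_id" and "queries" and the file name in the comment are hypothetical; rename them to match the headers in your downloaded file.

```python
import csv
import io
from collections import Counter
from typing import Dict, TextIO


def queries_per_run(csv_file: TextIO) -> Dict[str, int]:
    """Total the queries consumed by each run in an exported CSV log.

    Assumption: the export has hypothetical columns "run_id" and "queries".
    """
    totals: Counter = Counter()
    for row in csv.DictReader(csv_file):
        totals[row["run_id"]] += int(row["queries"])
    return dict(totals)


# With a real export you would open the file instead, e.g. (hypothetical name):
# with open("vext_logs.csv", newline="") as f:
#     print(queries_per_run(f))
sample = io.StringIO(
    "run_id,step,queries\n"
    "run-1,retrieve,1\n"
    "run-1,generate,2\n"
    "run-2,generate,1\n"
)
print(queries_per_run(sample))  # {'run-1': 3, 'run-2': 1}
```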

Enhanced Data Source Management

Original and Processed Data Views

To give you more control over your data, Vext 1.6 provides access to both the original files and the processed data. Comparing the two views lets you check how each file was processed before it is used in your pipeline.

Public Access Toggling

You can also toggle the public access setting for each original file, which lets you decide which files are shared and which stay private.

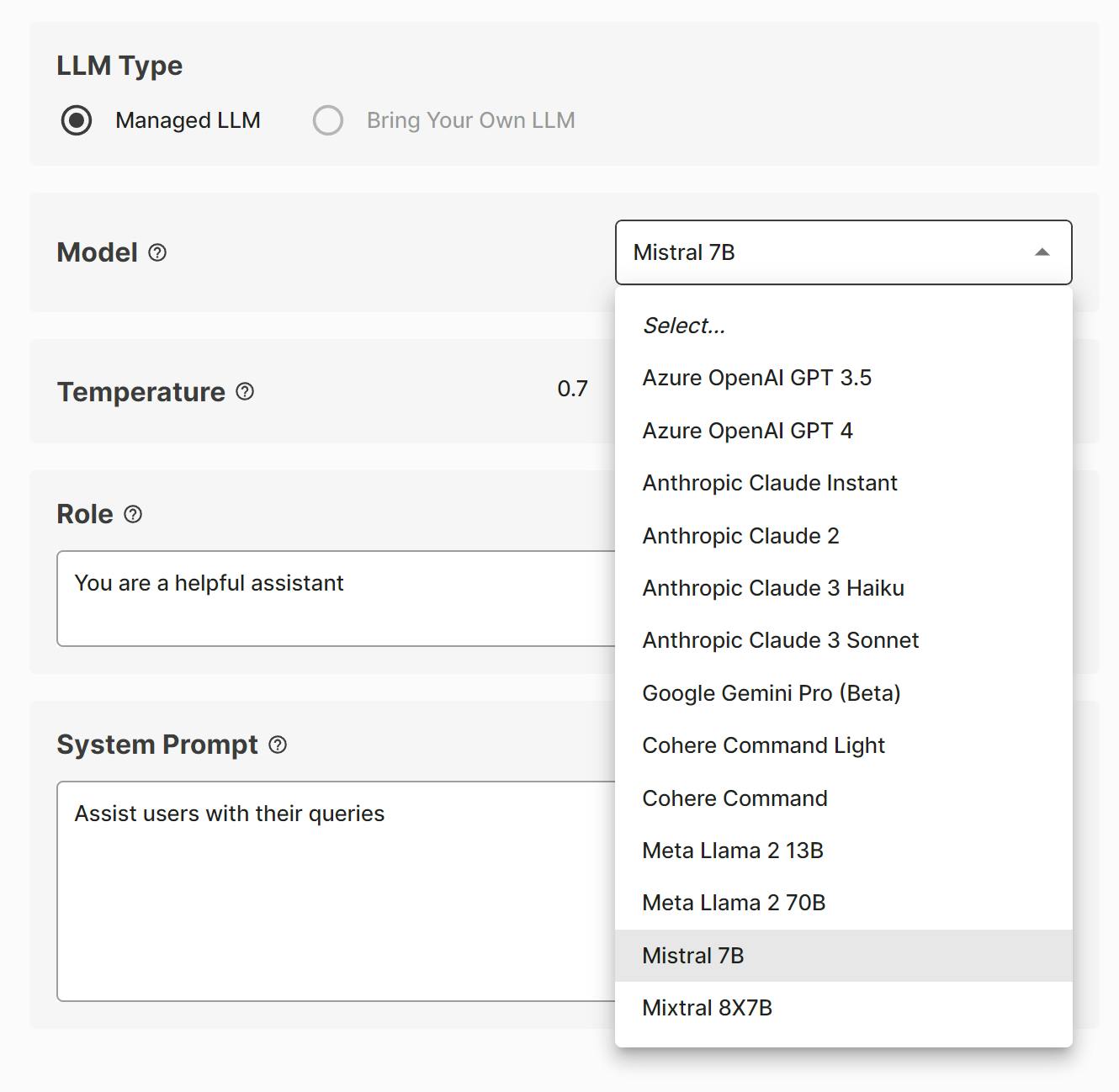

Expanded Model Options

Mistral 7B and Mixtral 8x7B

We are adding two powerful model options to cater to diverse project requirements. Mistral 7B, a 7B dense Transformer model, offers fast deployment and easy customization for a variety of use cases and supports English and code. For projects that demand more capability, Mixtral 8x7B is a sparse Mixture-of-Experts model that uses 12B active parameters out of 45B total and supports multiple languages as well as code.

With Vext, you now have a powerful lineup of models to choose from for use cases ranging from simple classifiers and basic chatbots to complex automation.

AI Project Interface Improvements

Larger Input Field

In response to user feedback, we replaced the list format for system prompts with a single, larger input field. This gives developers more freedom and flexibility when writing system prompts.